diff --git a/docs/detection/annotators.md b/docs/detection/annotators.md

index e1071121c..afe64ace0 100644

--- a/docs/detection/annotators.md

+++ b/docs/detection/annotators.md

@@ -28,26 +28,28 @@ Annotators accept detections and apply box or mask visualizations to the detecti

-=== "RoundBox"

+

=== "BoxCorner"

@@ -234,7 +236,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -255,7 +258,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -283,7 +287,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -314,7 +319,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -341,24 +347,32 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

-=== "Crop"

+

=== "Blur"

@@ -377,7 +391,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -398,7 +413,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -429,7 +445,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -458,7 +475,8 @@ Annotators accept detections and apply box or mask visualizations to the detecti

- { align=center width="800" }

+ { align=center width="800" }

@@ -479,7 +497,31 @@ Annotators accept detections and apply box or mask visualizations to the detecti

-

+ { align=center width="800" }

+

+

+

+=== "Comparison"

+

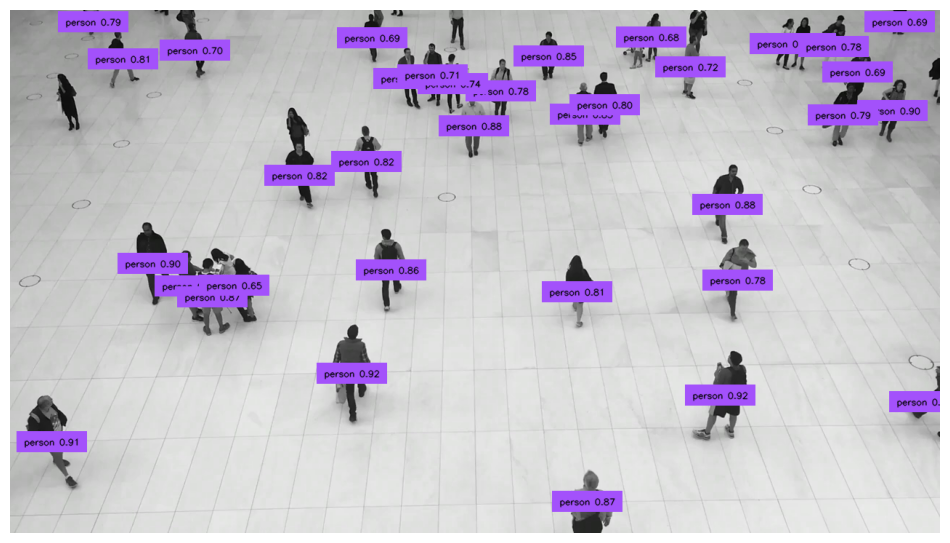

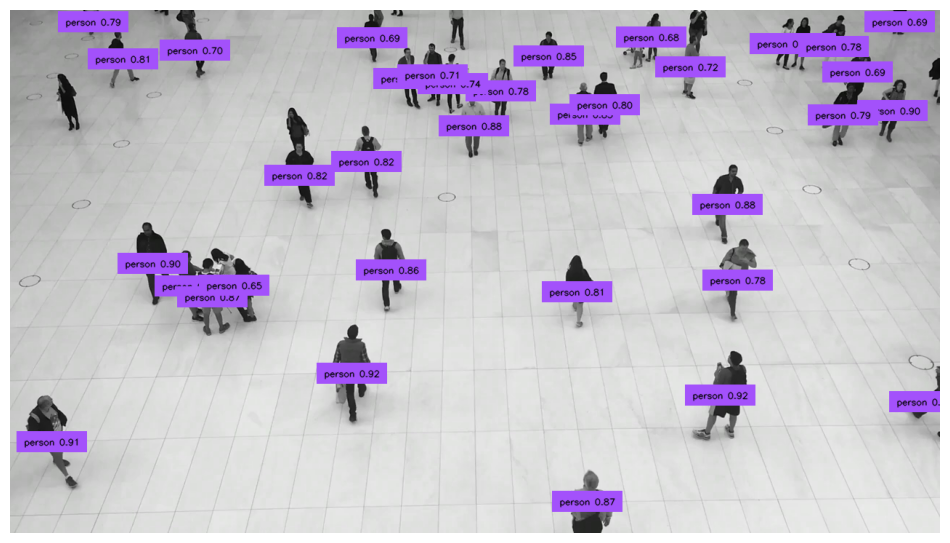

+    ```python
+    import supervision as sv
+
+    image = ...
+    detections_1 = sv.Detections(...)
+    detections_2 = sv.Detections(...)
+
+    comparison_annotator = sv.ComparisonAnnotator()
+    annotated_frame = comparison_annotator.annotate(
+        scene=image.copy(),
+        detections_1=detections_1,
+        detections_2=detections_2
+    )
+    ```

+

+

+

+ { align=center width="800" }

@@ -622,6 +664,12 @@ Annotators accept detections and apply box or mask visualizations to the detecti

:::supervision.annotators.core.BackgroundOverlayAnnotator

+

+

+:::supervision.annotators.core.ComparisonAnnotator

+
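
The documentation above describes the annotator as highlighting areas present in only one set of detections versus areas present in both. A minimal sketch of that region split and the opacity blend, assuming a tiny synthetic scene and two hand-made boolean masks (the array sizes, mask positions, and the `colors_bgr` dictionary are illustrative and not part of the PR), might look like this:

```python
import numpy as np

# Hypothetical 4x6 gray scene and two hand-made masks (illustrative only).
scene = np.full((4, 6, 3), 100, dtype=np.uint8)
mask_1 = np.zeros((4, 6), dtype=bool)
mask_2 = np.zeros((4, 6), dtype=bool)
mask_1[0:3, 0:4] = True  # first set covers rows 0-2, columns 0-3
mask_2[1:4, 2:6] = True  # second set covers rows 1-3, columns 2-5

# Split the union of both masks into three disjoint regions.
overlap = mask_1 & mask_2
only_1 = mask_1 & ~overlap
only_2 = mask_2 & ~overlap

# BGR equivalents of the constructor defaults (red, green, blue).
colors_bgr = {"only_1": (0, 0, 255), "only_2": (0, 255, 0), "overlap": (255, 0, 0)}
opacity = 0.75

# Blend each color into its region; pixels outside every region are untouched.
for name, region in (("only_1", only_1), ("only_2", only_2), ("overlap", overlap)):
    color = np.array(colors_bgr[name], dtype=np.float64)
    blended = (1 - opacity) * scene[region] + opacity * color
    scene[region] = blended.astype(np.uint8)

region_sizes = (int(only_1.sum()), int(only_2.sum()), int(overlap.sum()))
print(region_sizes)  # (8, 8, 4)
```

Because the three regions are disjoint, the order in which they are blended does not affect the result.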

diff --git a/supervision/__init__.py b/supervision/__init__.py

index e2ed4bb75..2b2a0082d 100644

--- a/supervision/__init__.py

+++ b/supervision/__init__.py

@@ -14,6 +14,7 @@
     BoxCornerAnnotator,
     CircleAnnotator,
     ColorAnnotator,
+    ComparisonAnnotator,
     CropAnnotator,
     DotAnnotator,
     EllipseAnnotator,

@@ -76,6 +77,7 @@
     scale_boxes,
     xcycwh_to_xyxy,
     xywh_to_xyxy,
+    xyxy_to_polygons,
 )
 from supervision.draw.color import Color, ColorPalette
 from supervision.draw.utils import (

@@ -136,6 +138,7 @@
     "ColorAnnotator",
     "ColorLookup",
     "ColorPalette",
+    "ComparisonAnnotator",
     "ConfusionMatrix",
     "CropAnnotator",
     "DetectionDataset",

@@ -222,4 +225,5 @@
     "scale_image",
     "xcycwh_to_xyxy",
     "xywh_to_xyxy",
+    "xyxy_to_polygons",
 ]
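
To illustrate what the newly exported `xyxy_to_polygons` helper returns, the sketch below copies its body from the `supervision/detection/utils.py` hunk of this diff into a local function so it runs without the PR's build installed. The sample `boxes` array is made up for illustration.

```python
import numpy as np


def xyxy_to_polygons(box: np.ndarray) -> np.ndarray:
    # Local copy of the function added in this diff: (N, 4) boxes -> (N, 4, 2).
    polygon = np.zeros((box.shape[0], 4, 2), dtype=box.dtype)
    polygon[:, :, 0] = box[:, [0, 2, 2, 0]]  # x of each corner
    polygon[:, :, 1] = box[:, [1, 1, 3, 3]]  # y of each corner
    return polygon


# Two illustrative boxes in (x_min, y_min, x_max, y_max) format.
boxes = np.array([[10, 20, 30, 40], [0, 0, 5, 8]], dtype=np.int64)
polygons = xyxy_to_polygons(boxes)

print(polygons.shape)        # (2, 4, 2)
print(polygons[0].tolist())  # [[10, 20], [30, 20], [30, 40], [10, 40]]
```

The corners come out in the order top-left, top-right, bottom-right, bottom-left, and the output keeps the dtype of the input array.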

diff --git a/supervision/annotators/core.py b/supervision/annotators/core.py

index 02ab47d6f..a2b2ed565 100644

--- a/supervision/annotators/core.py

+++ b/supervision/annotators/core.py

@@ -16,10 +16,16 @@
 )
 from supervision.config import CLASS_NAME_DATA_FIELD, ORIENTED_BOX_COORDINATES
 from supervision.detection.core import Detections
-from supervision.detection.utils import clip_boxes, mask_to_polygons, spread_out_boxes
+from supervision.detection.utils import (
+    clip_boxes,
+    mask_to_polygons,
+    polygon_to_mask,
+    spread_out_boxes,
+    xyxy_to_polygons,
+)
 from supervision.draw.color import Color, ColorPalette
-from supervision.draw.utils import draw_polygon
-from supervision.geometry.core import Position
+from supervision.draw.utils import draw_polygon, draw_rounded_rectangle, draw_text
+from supervision.geometry.core import Point, Position, Rect
 from supervision.utils.conversion import (
     ensure_cv2_image_for_annotation,
     ensure_pil_image_for_annotation,

@@ -2527,6 +2533,9 @@ def annotate(

detections=detections

)

```

+

+

"""

assert isinstance(scene, np.ndarray)

crops = [

@@ -2683,3 +2692,261 @@ def annotate(self, scene: ImageType, detections: Detections) -> ImageType:

np.copyto(scene, colored_mask)

return scene

+

+

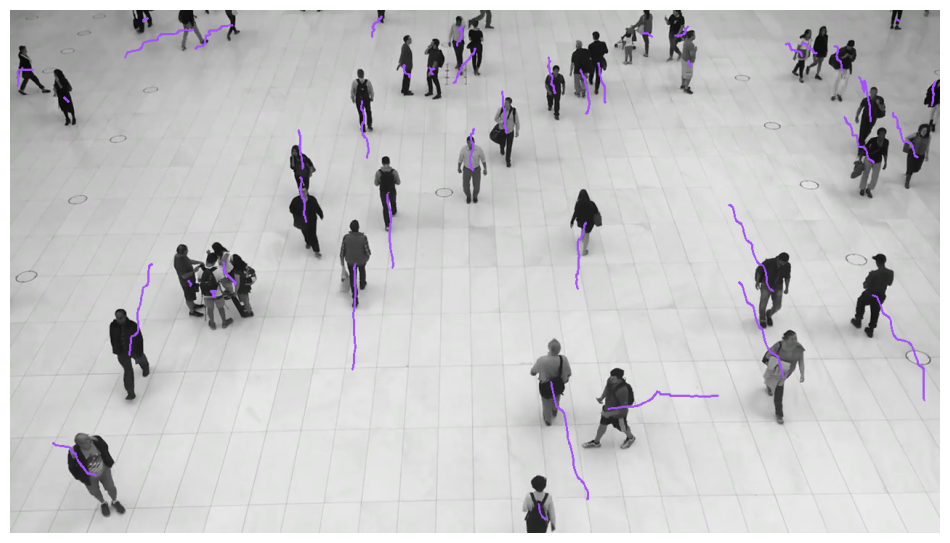

+class ComparisonAnnotator:
+    """
+    Highlights the differences between two sets of detections.
+    Useful for comparing results from two different models, or the difference
+    between a ground truth and a prediction.
+
+    If present, uses the oriented bounding box data.
+    Otherwise, if present, uses a mask.
+    Otherwise, uses the bounding box data.
+    """
+
+    def __init__(
+        self,
+        color_1: Color = Color.RED,
+        color_2: Color = Color.GREEN,
+        color_overlap: Color = Color.BLUE,
+        *,
+        opacity: float = 0.75,
+        label_1: str = "",
+        label_2: str = "",
+        label_overlap: str = "",
+        label_scale: float = 1.0,
+    ):
+        """
+        Args:
+            color_1 (Color): Color of areas only present in the first set of
+                detections.
+            color_2 (Color): Color of areas only present in the second set of
+                detections.
+            color_overlap (Color): Color of areas present in both sets of detections.
+            opacity (float): Annotator opacity, from `0` to `1`.
+            label_1 (str): Label for the first set of detections.
+            label_2 (str): Label for the second set of detections.
+            label_overlap (str): Label for areas present in both sets of detections.
+            label_scale (float): Controls how large the labels are.
+        """
+
+        self.color_1 = color_1
+        self.color_2 = color_2
+        self.color_overlap = color_overlap
+
+        self.opacity = opacity
+        self.label_1 = label_1
+        self.label_2 = label_2
+        self.label_overlap = label_overlap
+        self.label_scale = label_scale
+        self.text_thickness = int(self.label_scale + 1.2)

+

+    @ensure_cv2_image_for_annotation
+    def annotate(
+        self, scene: ImageType, detections_1: Detections, detections_2: Detections
+    ) -> ImageType:
+        """
+        Highlights the differences between two sets of detections.
+
+        Args:
+            scene (ImageType): The image where detections will be drawn.
+                `ImageType` is a flexible type, accepting either `numpy.ndarray`
+                or `PIL.Image.Image`.
+            detections_1 (Detections): The first set of detections or predictions.
+            detections_2 (Detections): The second set of detections to compare or
+                ground truth.
+
+        Returns:
+            The annotated image.
+
+        Example:
+            ```python
+            import supervision as sv
+
+            image = ...
+            detections_1 = sv.Detections(...)
+            detections_2 = sv.Detections(...)
+
+            comparison_annotator = sv.ComparisonAnnotator()
+            annotated_frame = comparison_annotator.annotate(
+                scene=image.copy(),
+                detections_1=detections_1,
+                detections_2=detections_2
+            )
+            ```
+
+
+        """
+        assert isinstance(scene, np.ndarray)
+        if detections_1.is_empty() and detections_2.is_empty():
+            return scene
+
+        use_obb = self._use_obb(detections_1, detections_2)
+        use_mask = self._use_mask(detections_1, detections_2)
+
+        if use_obb:
+            mask_1 = self._mask_from_obb(scene, detections_1)
+            mask_2 = self._mask_from_obb(scene, detections_2)
+
+        elif use_mask:
+            mask_1 = self._mask_from_mask(scene, detections_1)
+            mask_2 = self._mask_from_mask(scene, detections_2)
+
+        else:
+            mask_1 = self._mask_from_xyxy(scene, detections_1)
+            mask_2 = self._mask_from_xyxy(scene, detections_2)
+
+        mask_overlap = mask_1 & mask_2
+        mask_1 = mask_1 & ~mask_overlap
+        mask_2 = mask_2 & ~mask_overlap
+
+        color_layer = np.zeros_like(scene, dtype=np.uint8)
+        color_layer[mask_overlap] = self.color_overlap.as_bgr()
+        color_layer[mask_1] = self.color_1.as_bgr()
+        color_layer[mask_2] = self.color_2.as_bgr()
+
+        scene[mask_overlap] = (1 - self.opacity) * scene[
+            mask_overlap
+        ] + self.opacity * color_layer[mask_overlap]
+        scene[mask_1] = (1 - self.opacity) * scene[mask_1] + self.opacity * color_layer[
+            mask_1
+        ]
+        scene[mask_2] = (1 - self.opacity) * scene[mask_2] + self.opacity * color_layer[
+            mask_2
+        ]
+
+        self._draw_labels(scene)
+
+        return scene

+

+    @staticmethod
+    def _use_obb(detections_1: Detections, detections_2: Detections) -> bool:
+        assert not detections_1.is_empty() or not detections_2.is_empty()
+        is_obb_1 = ORIENTED_BOX_COORDINATES in detections_1.data
+        is_obb_2 = ORIENTED_BOX_COORDINATES in detections_2.data
+        return (
+            (is_obb_1 and is_obb_2)
+            or (is_obb_1 and detections_2.is_empty())
+            or (detections_1.is_empty() and is_obb_2)
+        )
+
+    @staticmethod
+    def _use_mask(detections_1: Detections, detections_2: Detections) -> bool:
+        assert not detections_1.is_empty() or not detections_2.is_empty()
+        is_mask_1 = detections_1.mask is not None
+        is_mask_2 = detections_2.mask is not None
+        return (
+            (is_mask_1 and is_mask_2)
+            or (is_mask_1 and detections_2.is_empty())
+            or (detections_1.is_empty() and is_mask_2)
+        )
+
+    @staticmethod
+    def _mask_from_xyxy(scene: np.ndarray, detections: Detections) -> np.ndarray:
+        mask = np.zeros(scene.shape[:2], dtype=np.bool_)
+        if detections.is_empty():
+            return mask
+
+        resolution_wh = scene.shape[1], scene.shape[0]
+        polygons = xyxy_to_polygons(detections.xyxy)
+
+        for polygon in polygons:
+            polygon_mask = polygon_to_mask(polygon, resolution_wh=resolution_wh)
+            mask |= polygon_mask.astype(np.bool_)
+        return mask
+
+    @staticmethod
+    def _mask_from_obb(scene: np.ndarray, detections: Detections) -> np.ndarray:
+        mask = np.zeros(scene.shape[:2], dtype=np.bool_)
+        if detections.is_empty():
+            return mask
+
+        resolution_wh = scene.shape[1], scene.shape[0]
+
+        for polygon in detections.data[ORIENTED_BOX_COORDINATES]:
+            polygon_mask = polygon_to_mask(polygon, resolution_wh=resolution_wh)
+            mask |= polygon_mask.astype(np.bool_)
+        return mask
+
+    @staticmethod
+    def _mask_from_mask(scene: np.ndarray, detections: Detections) -> np.ndarray:
+        mask = np.zeros(scene.shape[:2], dtype=np.bool_)
+        if detections.is_empty():
+            return mask
+        assert detections.mask is not None
+
+        for detections_mask in detections.mask:
+            mask |= detections_mask.astype(np.bool_)
+        return mask

+

+    def _draw_labels(self, scene: np.ndarray) -> None:
+        """
+        Draw the labels, explaining what each color represents, with automatically
+        computed positions.
+
+        Args:
+            scene (np.ndarray): The image where the labels will be drawn.
+        """
+        margin = int(50 * self.label_scale)
+        gap = int(40 * self.label_scale)
+        y0 = int(50 * self.label_scale)
+        height = int(50 * self.label_scale)
+
+        marker_size = int(20 * self.label_scale)
+        padding = int(10 * self.label_scale)
+        text_box_corner_radius = int(10 * self.label_scale)
+        marker_corner_radius = int(4 * self.label_scale)
+        text_scale = self.label_scale
+
+        label_color_pairs = [
+            (self.label_1, self.color_1),
+            (self.label_2, self.color_2),
+            (self.label_overlap, self.color_overlap),
+        ]
+
+        x0 = margin
+        for text, color in label_color_pairs:
+            if not text:
+                continue
+
+            (text_w, _) = cv2.getTextSize(
+                text=text,
+                fontFace=CV2_FONT,
+                fontScale=self.label_scale,
+                thickness=self.text_thickness,
+            )[0]
+
+            width = text_w + marker_size + padding * 4
+            center_x = x0 + width // 2
+            center_y = y0 + height // 2
+
+            draw_rounded_rectangle(
+                scene=scene,
+                rect=Rect(x=x0, y=y0, width=width, height=height),
+                color=Color.WHITE,
+                border_radius=text_box_corner_radius,
+            )
+
+            draw_rounded_rectangle(
+                scene=scene,
+                rect=Rect(
+                    x=x0 + padding,
+                    y=center_y - marker_size / 2,
+                    width=marker_size,
+                    height=marker_size,
+                ),
+                color=color,
+                border_radius=marker_corner_radius,
+            )
+
+            draw_text(
+                scene,
+                text,
+                text_anchor=Point(x=center_x + marker_size, y=center_y),
+                text_scale=text_scale,
+                text_thickness=self.text_thickness,
+            )
+
+            x0 += width + gap
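
The legend layout in `_draw_labels` is plain arithmetic on `label_scale` and the measured text width. The sketch below reproduces only that arithmetic so the box positions can be checked without OpenCV. The `legend_boxes` helper and the sample text widths are hypothetical stand-ins (in the PR, widths come from `cv2.getTextSize` and empty labels are skipped before this step).

```python
from typing import List, Tuple


def legend_boxes(
    text_widths: List[int], label_scale: float = 1.0
) -> List[Tuple[int, int, int, int]]:
    """Return (x, y, width, height) of each legend box, left to right."""
    # Same constants as the diff, all scaled by label_scale.
    margin = int(50 * label_scale)
    gap = int(40 * label_scale)
    y0 = int(50 * label_scale)
    height = int(50 * label_scale)
    marker_size = int(20 * label_scale)
    padding = int(10 * label_scale)

    boxes = []
    x0 = margin
    for text_w in text_widths:
        # Box width: text, color marker, and four paddings.
        width = text_w + marker_size + padding * 4
        boxes.append((x0, y0, width, height))
        # The next box starts after this one plus a fixed gap.
        x0 += width + gap
    return boxes


# Hypothetical text widths in pixels for two labels.
boxes = legend_boxes([100, 80])
print(boxes)  # [(50, 50, 160, 50), (250, 50, 140, 50)]
```

Every spacing constant scales with `label_scale`, so doubling it doubles the margins, gaps, and marker size while the text width is whatever the font measurement reports.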

diff --git a/supervision/detection/utils.py b/supervision/detection/utils.py

index f6bcd33bc..a2cbd87bd 100644

--- a/supervision/detection/utils.py

+++ b/supervision/detection/utils.py

@@ -11,6 +11,25 @@

MIN_POLYGON_POINT_COUNT = 3

+def xyxy_to_polygons(box: np.ndarray) -> np.ndarray:
+    """
+    Convert an array of boxes to an array of polygons.
+    Retains the input datatype.
+
+    Args:
+        box (np.ndarray): An array of boxes (N, 4), where each box is represented as a
+            list of four coordinates in the format `(x_min, y_min, x_max, y_max)`.
+
+    Returns:
+        np.ndarray: An array of polygons (N, 4, 2), where each polygon is
+            represented as a list of four coordinates in the format `(x, y)`.
+    """
+    polygon = np.zeros((box.shape[0], 4, 2), dtype=box.dtype)
+    polygon[:, :, 0] = box[:, [0, 2, 2, 0]]
+    polygon[:, :, 1] = box[:, [1, 1, 3, 3]]
+    return polygon

+

+

def polygon_to_mask(polygon: np.ndarray, resolution_wh: Tuple[int, int]) -> np.ndarray:

"""Generate a mask from a polygon.